Mastering Llama 3.3 – A Deep Dive into Running Local LLMs

I compared Llama 3.3 with older models like Llama 3.1 70B and Phi 3, tested Microsoft Graph API integrations using OpenAPI specs, and explored function calling quirks. The results? Not all models are created equal!

🚀 Introduction

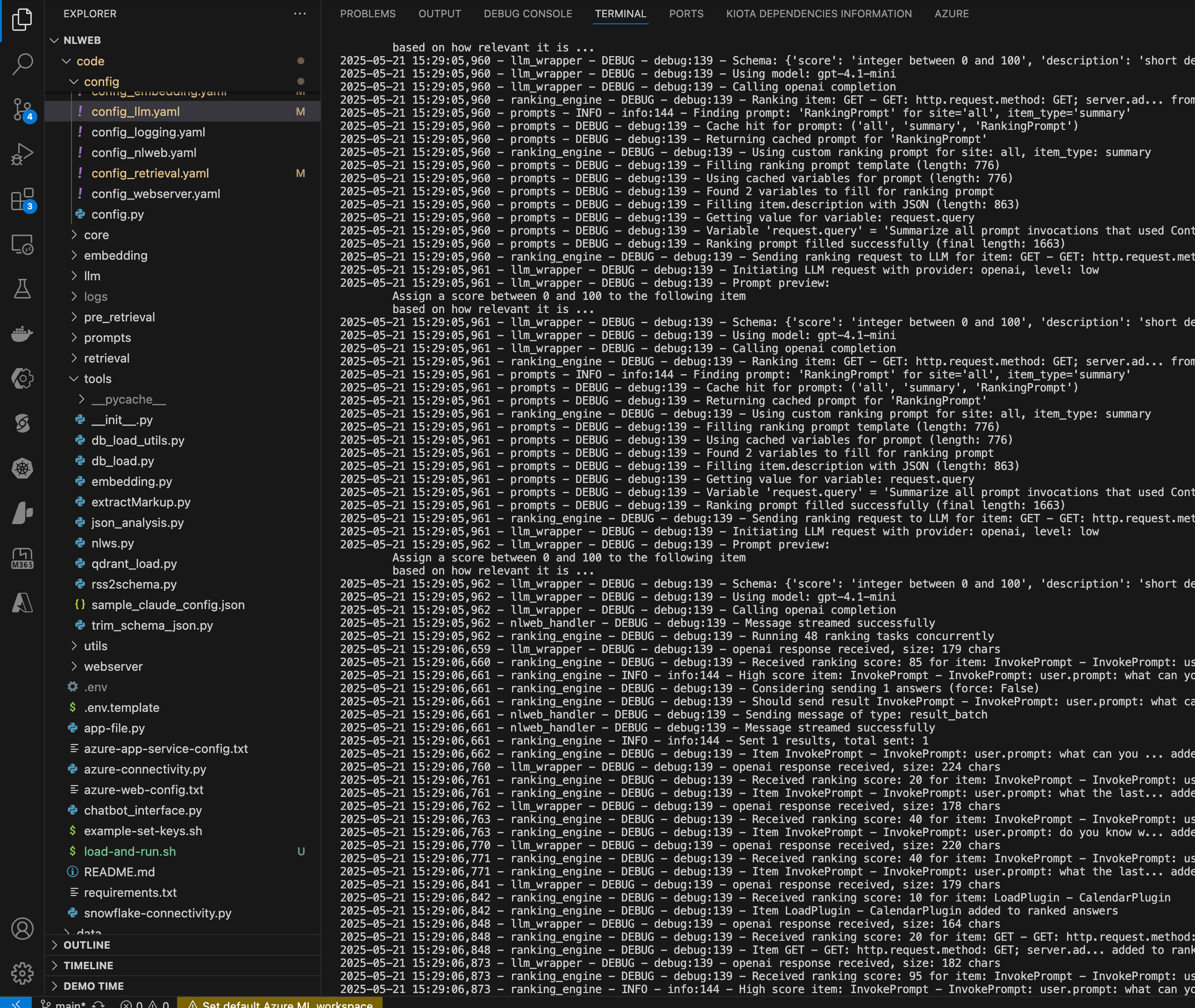

Over the holiday break, I decided to dive deep into Llama 3.3, running it on my MacBook Pro M3 Max (128GB RAM, 40-core GPU). What started as curiosity quickly turned into a full exploration of local AI models, Semantic Kernel, and API integrations using Microsoft Graph.

In this post, I’ll walk you through my setup, the performance differences between Llama 3.3 and other models like Llama 3.1 70B, and the practical lessons learned along the way.

🛠️ Setup and Tools

Here’s what I used:

- Hardware: MacBook Pro M3 Max (128GB RAM, 40-core GPU)

- Models: Llama 3.3, Llama 3.1 70B, Phi 3

- APIs: Microsoft Graph API using OpenAPI specs

- Framework: Semantic Kernel with auto-invoked functions for weather and time plugins

I also leveraged Graph Explorer for testing Microsoft Graph API endpoints, which simplified querying and verifying results.

📊 Key Comparisons – Llama 3.3 vs Llama 3.1 70B

Running Llama 3.3 locally was a significant improvement over Llama 3.1 70B. Responses came back faster and were more refined. However, I discovered that while Llama 3.3 supports function calling, not all API integrations worked seamlessly.

Example:

- Llama 3.3 (Function Calling Enabled): Managed to invoke functions via Semantic Kernel but hit limitations with nested queries (e.g., Microsoft Graph API).

- Phi 3: No function calling support – this was clearly noted during testing.
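To make "function calling support" concrete, here is a minimal Python sketch of what it asks of the host application: the model emits a structured tool call instead of prose, and the host looks up and runs the matching function. This is not Semantic Kernel's API; the `TOOLS` registry, `dispatch`, and both plugin functions are hypothetical names, and the forecast is a canned value.

```python
import json
from datetime import datetime, timezone

def get_weather(city: str) -> str:
    """Hypothetical weather plugin; returns a canned forecast."""
    return f"Forecast for {city}: sunny, 22 C"

def get_utc_time() -> str:
    """Hypothetical time plugin; returns the current UTC time."""
    return datetime.now(timezone.utc).isoformat(timespec="seconds")

# Tool descriptions in the JSON-schema style many chat APIs use (assumed shape).
TOOLS = {
    "get_weather": {
        "handler": get_weather,
        "description": "Get the forecast for a city",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
    "get_utc_time": {
        "handler": get_utc_time,
        "description": "Get the current UTC time",
        "parameters": {"type": "object", "properties": {}, "required": []},
    },
}

def dispatch(tool_call_json: str) -> str:
    """Run the tool named in a model's tool-call message and return its result."""
    call = json.loads(tool_call_json)
    tool = TOOLS.get(call.get("name"))
    if tool is None:
        return f"error: unknown tool {call.get('name')!r}"
    args = call.get("arguments", {})
    missing = [p for p in tool["parameters"]["required"] if p not in args]
    if missing:
        return f"error: missing arguments {missing}"
    return tool["handler"](**args)

# A model with function calling emits something like this instead of prose.
print(dispatch('{"name": "get_weather", "arguments": {"city": "Miami"}}'))
```

A model without function calling support never produces the structured message in the first place, so there is nothing for the host to dispatch.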

🤖 Real-World Example – Microsoft Graph API Integration

One of my goals was to retrieve contact details using Graph API, leveraging the OpenAPI spec. While the initial integration worked, invoking the API through Llama 3.3 wasn’t as straightforward. The model struggled with nested properties and query parameters, unlike GPT-4, which handled it effortlessly.
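As a rough illustration of where the difficulty lies, the sketch below builds a contacts query with `$select` and `$top` and then flattens the nested `emailAddresses` collection. The `/me/contacts` endpoint and the OData parameters are part of Microsoft Graph; the helper names and the sample payload are made up for this example, and no request is actually sent.

```python
from urllib.parse import urlencode

GRAPH_BASE = "https://graph.microsoft.com/v1.0"

def contacts_url(select, top=10):
    """Build a /me/contacts URL with OData query parameters."""
    params = {"$select": ",".join(select), "$top": str(top)}
    # Keep '$' and ',' readable instead of percent-encoding them.
    return f"{GRAPH_BASE}/me/contacts?{urlencode(params, safe='$,')}"

def flatten_contacts(payload):
    """Pull display names and email addresses out of the nested response."""
    rows = []
    for contact in payload.get("value", []):
        emails = [
            entry["address"]
            for entry in contact.get("emailAddresses", [])
            if "address" in entry
        ]
        rows.append({"name": contact.get("displayName", ""), "emails": emails})
    return rows

# Made-up sample shaped like a Graph contacts response.
sample = {
    "value": [
        {
            "displayName": "Ada Example",
            "emailAddresses": [{"name": "Ada", "address": "ada@example.com"}],
        }
    ]
}

print(contacts_url(["displayName", "emailAddresses"], top=5))
print(flatten_contacts(sample))
```

A model has to get both halves right: the query-string syntax on the way in and the nested collection on the way out, which is a lot more structure than a single-argument weather lookup.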

🎭 The Role of Plugins

To enhance functionality, I added plugins for:

- Weather Forecasts

- Local Time Retrieval

Llama 3.3 correctly identified which plugin to use based on the prompt but lacked streaming capabilities when invoking functions directly. This required creative solutions – running the initial call non-streaming and then invoking streaming for the assistant’s commentary.
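The two-phase workaround can be sketched roughly as follows. The model calls are replaced by stand-ins (`fake_complete` and `fake_stream` are hypothetical, as is the `PLUGINS` table), so this shows only the control flow, not any real Semantic Kernel or model API.

```python
from typing import Callable, Dict, Iterator, List

def fake_complete(messages: List[dict]) -> dict:
    """Hypothetical non-streaming chat call that returns a whole tool call."""
    return {"tool_call": {"name": "get_weather", "arguments": {"city": "Miami"}}}

def fake_stream(messages: List[dict]) -> Iterator[str]:
    """Hypothetical streaming chat call; yields the reply in chunks."""
    tool_result = messages[-1]["content"]
    for chunk in ["Here is ", "the forecast: ", tool_result]:
        yield chunk

# Hypothetical plugin table; a real plugin would call a weather service.
PLUGINS: Dict[str, Callable[..., str]] = {
    "get_weather": lambda city: f"sunny in {city}",
}

def answer(prompt: str) -> Iterator[str]:
    messages = [{"role": "user", "content": prompt}]
    # Phase 1: non-streaming, so the full tool call arrives in one piece.
    first = fake_complete(messages)
    call = first.get("tool_call")
    if call:
        result = PLUGINS[call["name"]](**call["arguments"])
        messages.append({"role": "tool", "content": result})
    # Phase 2: stream the assistant's commentary on the tool result.
    yield from fake_stream(messages)

print("".join(answer("What's the weather in Miami?")))
```

The user still sees text arrive incrementally in the second phase; only the function-invoking round trip is handled in bulk.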

💡 Key Takeaways

- Not All Models Are Equal – Llama 3.3 outperforms older models but still has limitations.

- Function Calling Quirks – Calls that invoke functions had to run non-streaming, so their results come back in bulk; streaming was only usable for the follow-up commentary.

- Hardware Matters – A MacBook M3 Max handles local LLMs smoothly, but performance varies by model.

- API Complexity – Simple APIs (like weather) work well, but complex APIs (like Graph) require more finesse.
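On the hardware point, a back-of-the-envelope calculation shows why memory is the main constraint. Llama 3.3 is a 70B-parameter model, and the weights alone take roughly parameters × bits per weight ÷ 8 bytes. The post does not say which quantization was used, so the bit widths below are assumptions, and the estimate ignores the KV cache and runtime overhead.

```python
def weights_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billions * 1e9 * bits_per_weight / 8 / 1e9

# Assumed precisions: 16-bit (unquantized), 8-bit, and 4-bit quantization.
for bits in (16, 8, 4):
    print(f"70B at {bits}-bit: ~{weights_gb(70, bits):.0f} GB")
```

By this estimate the 16-bit weights (about 140 GB) would not fit in 128GB of memory, while 8-bit (about 70 GB) and 4-bit (about 35 GB) variants would.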

🎬 Watch the Full Video

For a step-by-step walkthrough and live demos, check out the full video:

👉 https://youtu.be/q_2BWCCnSS4

Thanks for reading, and happy experimenting with local LLMs!

Happy coding!

Chat about this?

| Engage with me | Click |
|---|---|
| BlueSky | @fabianwilliams |
| Fabian G. Williams |

Or use the share buttons at the top of the page! Thanks

Cheers! Fabs